Controlling the output of generative AI systems is important.

Self-learning systems known as generative AI have become popular around the globe.

Some of these advanced models can generate text, images, music, and even video from instructions alone.

They’re disrupting industries from entertainment to marketing, and research too. But as we continue to harness the creative potential of these systems, there’s a growing concern: How do we monitor what they come up with?

Given the overwhelming capability and potential of AI, we need to ensure that what it produces is ethical, accurate, and safe.

It is no longer enough to simply come up with new content every time.

Content quality must also be judged by how well it reflects society’s values and whether it avoids reinforcing prejudice, spreading falsehoods, or causing legal problems.

In this post, we will learn why it is important to control the output created by generative AI and why both developers and end users should be careful about what these systems generate.

- Generative AI Systems

- What Are The Potential Risks of Uncontrolled Generative AI System Outputs?

- The Role of Ethics in Generative AI Output

- Why Is Human Oversight of Generative AI Important?

- What Are The Regulatory and Legal Implications?

- What Are The Strategies to Control Generative AI Outputs?

- What Are The Benefits of Controlled Generative AI Outputs?

- Conclusion

| Feature | Controlled Generative AI Output | Uncontrolled Generative AI Output |
|---|---|---|
| Definition | Generative AI that produces outputs following specific guidelines or constraints. | Generative AI that creates outputs freely, with minimal constraints. |
| Level of Customization | High – allows for detailed control over content, style, and scope. | Low to Moderate – produces results based on initial prompts but lacks specific control. |
| Application Suitability | Ideal for professional or industry-specific tasks needing consistency. | Suited for creative exploration, brainstorming, or entertainment. |
| Risk of Inappropriate Output | Low – filters and constraints minimize harmful or unsuitable outputs. | High – without constraints, the model may produce irrelevant or sensitive content. |
| User Input Requirements | Often requires clear, detailed inputs to define boundaries and preferences. | Typically needs only a prompt, making it easy to use but with unpredictable results. |
| Reliability of Outputs | High – generates consistent, repeatable results aligned with the set parameters. | Variable – outputs may change significantly with each run, leading to unique but unpredictable results. |
| Use Cases | Useful for customer service, healthcare, educational content, and regulated industries. | Commonly used for art, storytelling, experimental projects, and open-ended tasks. |
| Model Constraints | Operates with specific rules or guardrails, potentially supervised by user-defined filters. | Runs with fewer or no restrictions, relying mainly on the model’s training data. |
| Training Requirements | May require fine-tuning on curated datasets to match desired outputs closely. | Can use general, large-scale datasets, allowing for broader creativity. |
| Example Models | ChatGPT with safety layers, Stable Diffusion with prompt moderation | DALL-E or Midjourney without filtering, open-ended GPT-4 responses |

Generative AI Systems

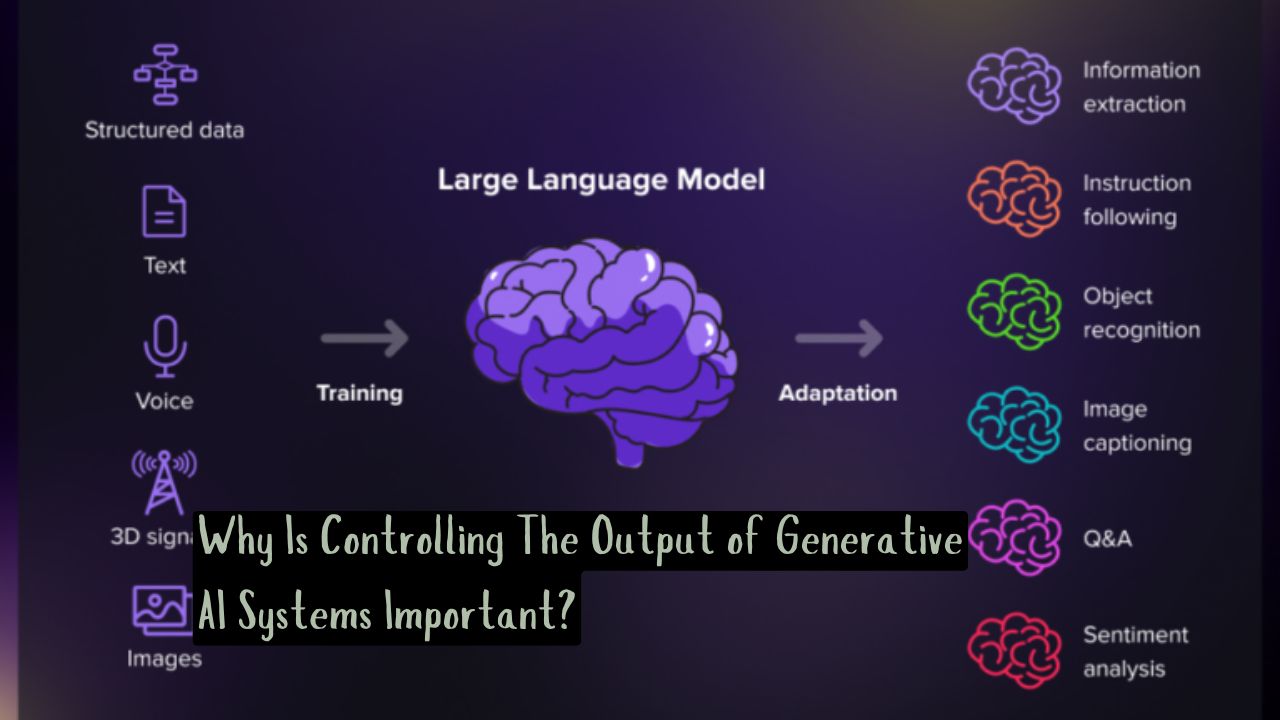

Generative AI, also known as generative models, is a category of AI in which the model produces new content based on what it learned from its training data.

It helps to think of examples such as OpenAI’s GPT-4, which can write an article or answer a question, or Stable Diffusion, which can generate an image from a text description.

These are sophisticated systems that use computer algorithms to make predictions or create content that closely resembles human-made work.

These technologies seem to hold endless creativity, and that is the very reason we must rein them in: they can create content on their own.

Most of the time these systems behave well, but harmful results can arise when they are poorly directed.

What Are The Potential Risks of Uncontrolled Generative AI System Outputs?

Just think of an AI concierge that tells you fake stories or repeats unpleasant clichés. Chilling, isn’t it?

Well, these are real risks when generative AI systems are left unchecked, as some recently deployed ones have been.

One issue that professionals consider especially significant is misinformation.

AI can generate entirely fake but realistic-looking news articles, images, or information, which may reach many people over the internet, especially through social media platforms.

Another major concern is prejudice and discrimination. Discrimination is a critical social risk and can also result in sizeable financial losses for organizations.

AI models learn only from the datasets they are given, and if those datasets contain biased views, the model will carry those biases into its outputs.

This could lead to content that carries unintended harmful messages, such as stereotyping or excluding certain groups.

Last on the list is copyright infringement. When AI produces content that is virtually indistinguishable from existing works, whether text, music, or graphics, critical legal questions arise over ownership and copyright.

The Role of Ethics in Generative AI Output

Ethics sits at the center of the question of controlling AI output. There is always the risk that such systems will produce undesired or even obscene results.

Without effective control mechanisms, an AI model can generate violent or obscene content that encourages real-life vices.

So, ethics plays a key role in generative AI output.

Accountability is also an issue: it is still highly uncertain who is actually in the driver’s seat, and therefore responsible, when an AI system makes a mistake.

Is it the app developer, the user, or the AI itself?

The responsibility falls back on the creators and users of AI, since the AI itself has no capacity to understand its own existence.

And this is not just about making these systems act correctly, but also about making them act in ways that people will consider ethical.

Why Is Human Oversight of Generative AI Important?

Smart though AI may be, it still requires the human touch. This is where human-in-the-loop systems come in: people stay involved in the process and make the decisions at key points.

We should always keep people involved in decisions, because that is the only way to stop AI outputs that are polished in form yet completely wrong.

For example, human moderators can step in and review AI content before it goes out on the open web.

A concept closely tied to managing AI output is AI guardrails. These are the rules built into an AI system to prevent it from taking wrong or undesired actions or decisions.

For instance, an AI chatbot may be programmed to avoid the use of certain words or to not engage in certain topics like religion.
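The chatbot example above can be sketched as a small guardrail layer. This is a minimal illustration, not how any particular product works: the word list, the topic keywords, the refusal message, and the function name `apply_guardrails` are all assumptions made up for this example.

```python
import re

# Hypothetical policy: these lists are placeholders, not a recommended policy.
BLOCKED_WORDS = {"damn", "hell"}
BLOCKED_TOPICS = {"religion": {"religion", "church", "prayer"}}
REFUSAL = "Sorry, I can't discuss that topic."


def apply_guardrails(user_message: str, draft_reply: str) -> str:
    """Refuse blocked topics, then mask blocked words in the draft reply."""
    user_words = set(re.findall(r"[a-z']+", user_message.lower()))

    # Rule 1: do not engage if the user's message touches a blocked topic.
    for keywords in BLOCKED_TOPICS.values():
        if user_words & keywords:
            return REFUSAL

    # Rule 2: replace each blocked word in the reply with asterisks.
    def mask(match: re.Match) -> str:
        word = match.group(0)
        return "*" * len(word) if word.lower() in BLOCKED_WORDS else word

    return re.sub(r"[A-Za-z']+", mask, draft_reply)


print(apply_guardrails("Tell me about religion", "Here is an overview."))
print(apply_guardrails("How is the weather?", "Damn, it is cold."))
```

Keyword matching like this is easy to audit but also easy to evade, so in practice it would likely be only one layer among several.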

What Are The Regulatory and Legal Implications?

On the legal side, authorities and regulatory agencies are gradually turning their attention to generative AI.

Frameworks such as the GDPR and CCPA already push AI development and usage toward respecting privacy and data security.

However, as AI becomes more advanced, there will be a need for better regulation that addresses the new challenges posed by generative systems.

Future legislation is likely to seek greater transparency from AI systems, hold their creators responsible for what is generated, and prohibit AI-forged text.

This should help strike a balance between two valuable objectives: harnessing the creative potential of these systems and keeping their output safe and lawful.

What Are The Strategies to Control Generative AI Outputs?

So the question is: how do we actually control these outputs?

One good strategy is to manage the training data.

This means that AI system creators need to be careful when choosing the data they feed into the system, because poorly chosen data can lead to a model that generates prohibited or damaging outputs.
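As a rough illustration of what curating training data can mean in practice, the sketch below drops very short examples, examples containing banned phrases, and duplicates. The function name `curate`, the `banned_terms` list, and the `min_words` threshold are assumptions for this example; real pipelines are far more involved.

```python
def curate(examples, banned_terms, min_words=3):
    """Return the examples that pass three simple quality checks."""
    seen = set()
    kept = []
    for text in examples:
        # Normalize case and whitespace so near-identical copies compare equal.
        normalized = " ".join(text.lower().split())

        if len(normalized.split()) < min_words:
            continue  # too short to be useful
        if any(term in normalized for term in banned_terms):
            continue  # contains a banned phrase
        if normalized in seen:
            continue  # duplicate of an example already kept

        seen.add(normalized)
        kept.append(text)
    return kept


raw = [
    "The cat sat on the mat.",
    "the cat  sat on the mat.",
    "Buy cheap pills now",
    "Too short",
]
print(curate(raw, banned_terms={"cheap pills"}))
```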

Another method is to use bias detection tools that can flag certain outputs as potentially biased or incorrect.
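To make the idea of flagging concrete, here is a deliberately simple heuristic: it flags any sentence that combines a group term with a sweeping generalization. The term lists and the function name `flag_possible_bias` are hypothetical, and real bias detection tools typically rely on trained classifiers rather than keyword rules like this.

```python
import re

# Hypothetical word lists, chosen only to illustrate the idea.
GROUP_TERMS = {"women", "men", "immigrants", "elderly"}
GENERALIZERS = {"all", "always", "never", "every"}


def flag_possible_bias(text: str) -> list[str]:
    """Return sentences that pair a group term with a generalizing word."""
    flagged = []
    # Split on whitespace that follows sentence-ending punctuation.
    for sentence in re.split(r"(?<=[.!?])\s+", text.strip()):
        words = set(re.findall(r"[a-z]+", sentence.lower()))
        if words & GROUP_TERMS and words & GENERALIZERS:
            flagged.append(sentence)
    return flagged


print(flag_possible_bias("All immigrants are the same. The weather is nice."))
```

A flag here only means that a person should take a look; the heuristic will miss subtle bias and will sometimes flag harmless sentences.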

Another essential concept is content moderation. Just as social media platforms moderate the posts that appear online, AI-generated content should also be moderated.

This keeps unpleasant images that may have slipped through other safeguards from reaching children.
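One way to picture moderation combined with human review is a queue that publishes low-risk content automatically and holds everything else for a person to approve. In this sketch the class `ModerationQueue`, the `risk_score` input, and the `0.5` threshold are all assumptions; the score is expected to come from some upstream check that is not shown here.

```python
from dataclasses import dataclass, field


@dataclass
class ModerationQueue:
    pending: list = field(default_factory=list)    # waiting for human review
    published: list = field(default_factory=list)  # cleared for release

    def submit(self, text: str, risk_score: float, threshold: float = 0.5) -> str:
        """Publish low-risk content; hold anything at or above the threshold."""
        if risk_score >= threshold:
            self.pending.append(text)
            return "held"
        self.published.append(text)
        return "published"

    def review(self, text: str, approve: bool) -> None:
        """Record a human moderator's decision on a held item."""
        self.pending.remove(text)
        if approve:
            self.published.append(text)


queue = ModerationQueue()
queue.submit("A friendly greeting", risk_score=0.1)
queue.submit("A borderline image caption", risk_score=0.9)
queue.review("A borderline image caption", approve=False)
print(queue.published)
```

Holding content by default when the score is high trades some speed for safety, which fits cases where the audience may include children.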

What Are The Benefits of Controlled Generative AI Outputs?

When generative AI systems are properly controlled, the benefits are huge. For one, we get safer and more reliable AI-generated content.

This means fewer chances of misinformation, bias, or legal trouble. Controlling outputs also enables us to enhance creativity while maintaining ethical standards.

Lastly, controlled AI fosters trust. When users know that the AI is being monitored and its outputs are aligned with ethical guidelines, they’re more likely to feel comfortable using the technology.

Conclusion

In a world of major shifts in power, where AI is already reshaping our societies, controlling the outputs of generative AI systems is not just desirable; it is essential.

That is why the need for regulation is so evident: to prevent bias, protect against fake news, and comply with the law.

How disruptive and progressive this change turns out to be will depend on how future upgrades to AI technology are handled. But let it be clear that we must get this right.